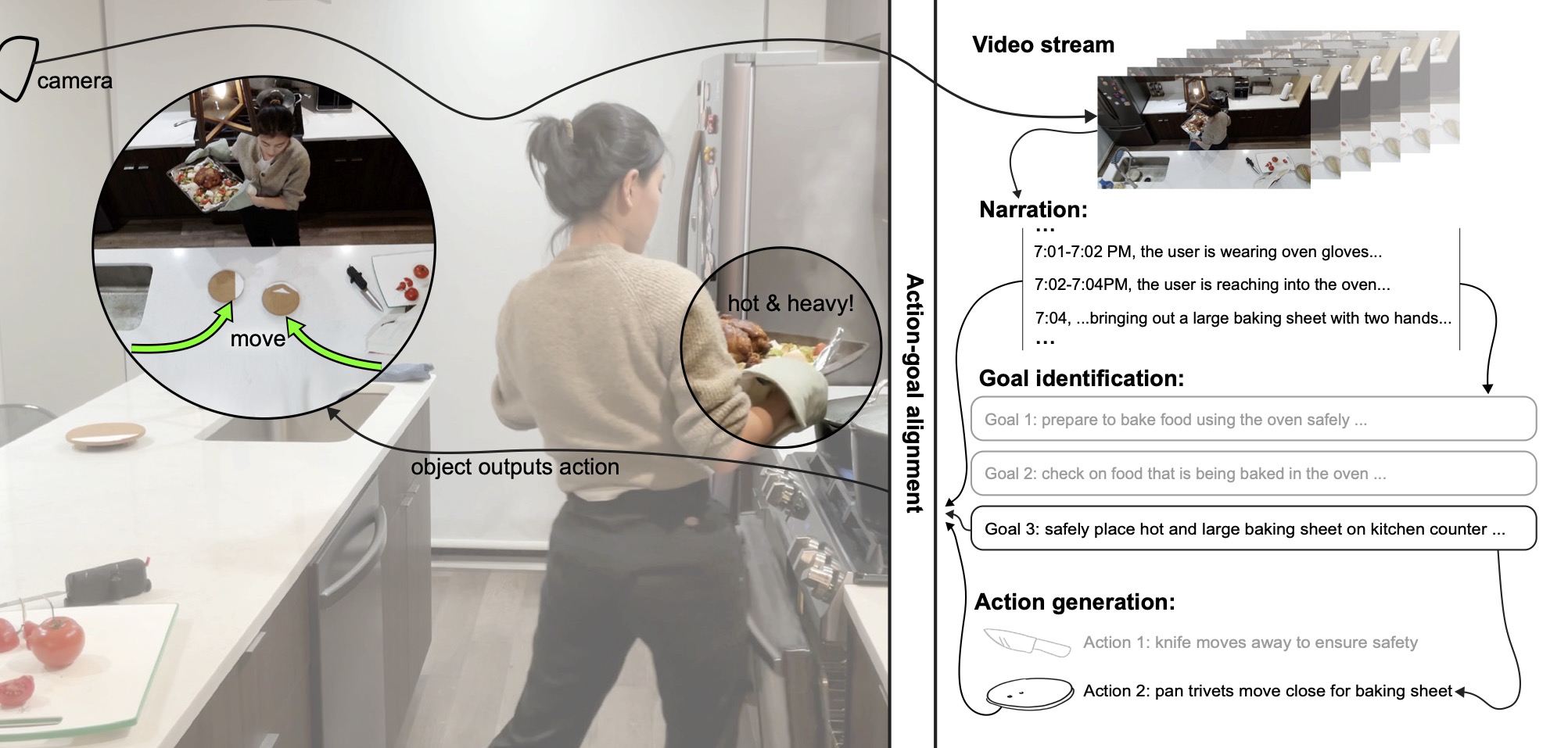

Users constantly interact with physical objects, most of which are passive. Consider instead if familiar objects proactively assisted users: for example, a stapler moving across the table to help a user organize documents, or a knife moving out of the way to prevent injury as an inattentive user is about to lean against the countertop. In this paper, we build on the qualities of tangible interaction and focus on recognizing user needs in everyday tasks to enable ubiquitous yet unobtrusive tangible interaction. To achieve this, we introduce an architecture that leverages large language models (LLMs) to perceive the user's environment and activities, perform spatial-temporal reasoning, and generate object actions aligned with inferred user intentions and object properties. We demonstrate the system's utility in providing proactive assistance with multiple objects across various daily scenarios. To evaluate the system's components, we compare its output for user goal estimation and object action recommendation against human-annotated baselines; the results indicate good agreement.
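The perceive, infer-goal, and recommend-action flow described above can be illustrated with a minimal Python sketch. This is not the authors' implementation. The LLM-based goal estimation is replaced by a hypothetical lookup table (`ACTIVITY_TO_GOAL`), and every name (`Observation`, `TrackedObject`, `recommend_actions`, the `reach` and `safe_radius` thresholds) is an illustrative assumption. The sketch handles a single perceived snapshot only, so the temporal part of the spatial-temporal reasoning is omitted. What it shows is how an inferred goal combined with object properties (movable, hazardous, or affording an action) could be turned into movement targets for the stapler and knife examples.

```python
from __future__ import annotations

import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # (x, y) on the work surface, metres (assumed)


@dataclass
class TrackedObject:
    name: str
    position: Point
    movable: bool                     # hypothetical: mounted on a robotic base?
    affordance: Optional[str] = None  # hypothetical label, e.g. "stapling"
    hazardous: bool = False


@dataclass
class Observation:
    user_position: Point
    activity: str                     # perceived activity label (assumed input)
    objects: List[TrackedObject]


@dataclass
class ObjectAction:
    object_name: str
    target: Point
    reason: str


# Hypothetical stand-in for the LLM: a fixed activity -> goal table.
ACTIVITY_TO_GOAL = {
    "organizing documents": "fasten pages together",
    "leaning on countertop": "avoid touching sharp objects",
}
# Hypothetical goal -> affordance table for "bring an object closer" goals.
GOAL_TO_AFFORDANCE = {"fasten pages together": "stapling"}


def infer_goal(obs: Observation) -> Optional[str]:
    """Goal estimation, stubbed with a lookup (an LLM would go here)."""
    return ACTIVITY_TO_GOAL.get(obs.activity)


def point_at_distance(origin: Point, toward: Point, dist: float) -> Point:
    """Point `dist` away from `origin` along the ray origin -> toward."""
    dx, dy = toward[0] - origin[0], toward[1] - origin[1]
    norm = math.hypot(dx, dy)
    if norm == 0:
        dx, dy, norm = 1.0, 0.0, 1.0  # degenerate case: fall back to +x
    return (origin[0] + dx / norm * dist, origin[1] + dy / norm * dist)


def recommend_actions(obs: Observation, reach: float = 0.4,
                      safe_radius: float = 0.6) -> List[ObjectAction]:
    """Map the inferred goal plus object properties to movement targets."""
    goal = infer_goal(obs)
    if goal is None:
        return []  # no inferred need: every object stays passive
    wanted = GOAL_TO_AFFORDANCE.get(goal)
    actions: List[ObjectAction] = []
    for obj in obs.objects:
        if not obj.movable:
            continue  # object properties limit which actions are feasible
        dist = math.dist(obj.position, obs.user_position)
        if (goal == "avoid touching sharp objects" and obj.hazardous
                and dist < safe_radius):
            # Push the hazard outward until it sits safe_radius from the user.
            target = point_at_distance(obs.user_position, obj.position, safe_radius)
            actions.append(ObjectAction(obj.name, target, "move away from user"))
        elif wanted is not None and obj.affordance == wanted and dist > reach:
            # Pull the helpful object inward until it is within reach.
            target = point_at_distance(obs.user_position, obj.position, reach)
            actions.append(ObjectAction(obj.name, target, "bring within reach"))
    return actions


if __name__ == "__main__":
    scene = Observation(
        user_position=(0.0, 0.0),
        activity="leaning on countertop",
        objects=[TrackedObject("knife", (0.2, 0.0), movable=True, hazardous=True),
                 TrackedObject("cup", (0.1, 0.1), movable=False)],
    )
    for action in recommend_actions(scene):
        print(action)
```

In this sketch, returning an empty action list whenever no goal is inferred is one way to keep the assistance unobtrusive: objects move only when a specific need has been recognized.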

Publication

Violet Yinuo Han, Jesse T. Gonzalez, Christina Yang, Zhiruo Wang, Scott E. Hudson, Alexandra Ion. 2025. Towards Unobtrusive Physical AI: Augmenting Everyday Objects with Intelligence and Robotic Movement for Proactive Assistance. In Proceedings of UIST ’25. Busan, Republic of Korea. Sept. 28 - Oct. 01, 2025. DOI: https://doi.org/10.1145/3746059.3747726